[AINews] OpenAI launches Operator, its first Agent

Chapters

AI Twitter Recap

Memes and Humor on AI Twitter

AI Model Development and Multi-GPU Support

Latent Space Discord

Discussions on Windsurf AI Features and Performance

Building and Fine-Tuning Agentic IDEs

Perplexity AI Sharing

GPT Outage, Voice Feature Issues, and Humorous Blame Game

Nous Research AI Interesting Links

GPU Mode Discussions

Cohere API Discussions

User Experiences and Discussions on NotebookLM Integration

Social Media and Newsletter Links

AI Twitter Recap

Summary

- OpenAI's Operator Launch: OpenAI introduced Operator, a computer-using agent that interacts with web browsers for tasks like booking reservations and ordering groceries. Users such as @sama and @swyx praised it for handling repetitive browser tasks efficiently.

- DeepSeek R1 and Models: DeepSeek AI unveiled DeepSeek R1, an open-source reasoning model that outperforms competitors on 'Humanity’s Last Exam'. @francoisfleuret commended its transformer architecture and benchmark performance.

- VideoLLaMA 3: Alibaba DAMO Academy's VideoLLaMA 3, a multimodal foundation model for image and video understanding, was open-sourced for research and application, as shared by @arankomatsuzaki.

- Perplexity Assistant Release: Perplexity AI released Perplexity Assistant for Android, integrating reasoning and search capabilities. Users can activate the assistant and utilize features like multimodal interactions.

- AI Benchmarks and Evaluation:

- Humanity's Last Exam: @DanHendrycks introduced Humanity’s Last Exam, a 3,000-question dataset to evaluate AI's reasoning abilities. Current models score below 10% accuracy, indicating room for improvement.

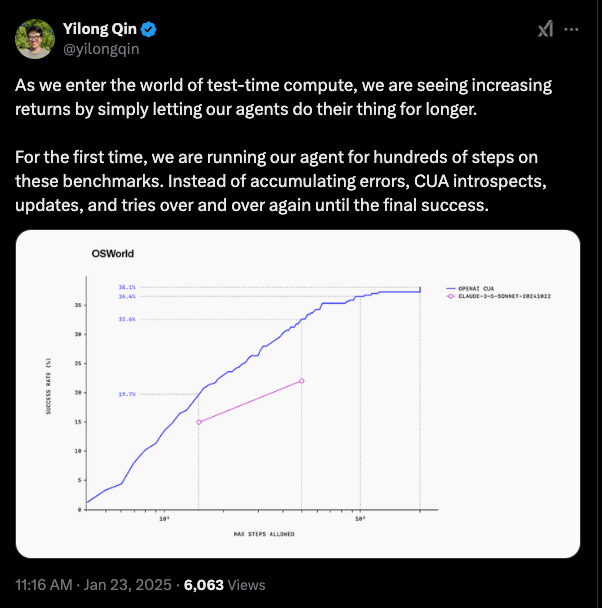

- CUA Performance on OSWorld and WebArena: @omarsar0 shared the performance results of Computer-Using Agent (CUA) on OSWorld and WebArena benchmarks, showing improved performance over previous models but still trailing human performance.

- DeepSeek R1's Dominance: Multiple tweets from @teortaxesTex highlighted DeepSeek R1’s superior performance on text-based benchmarks, surpassing models like LLaMA 4 and OpenAI's o1 in various evaluation metrics.

- AI Safety and Ethics:

- Citations and Safe AI Responses: @AnthropicAI launched Citations, enabling AI models like Claude to provide grounded answers with precise source references, enhancing output reliability and user trust.

- Overhype and Hallucinations in AI: @kylebrussell criticized the overhyping of AI technologies, emphasizing that errors should not lead to a dismissal of AI advancements.

- AI as Creative Collaborators: @c_valenzuelab advocated for viewing AI as creative collaborators rather than tools, highlighting the importance of subjective and emotional evaluation in artistic endeavors.

- AI Research and Development:

- Program Synthesis and AGI: @TheTuringPost explored program synthesis as a pathway to Artificial General Intelligence, combining pattern recognition with abstract reasoning to overcome deep learning limitations.

- Diffusion Feature Extractors: @ostrisai reported progress on training Diffusion Feature Extractors using LPIPS outputs, resulting in cleaner image features and enhanced text understanding.

- X-Sample Contrastive Loss (X-CLR): @DeepLearningAI highlighted X-Sample Contrastive Loss, a self-supervised loss function that assigns continuous similarity scores between samples, rather than binary match or no-match labels, to improve contrastive learning performance.

- AI Industry and Companies:

- Stargate Project Investment: @saranormous discussed the $500B Stargate investment aimed at boosting compute power and AI token usage, questioning its impact on intelligence acquisition and industry competition.

- Google Colab’s Impact: @osanseviero highlighted Google Colab's role in democratizing GPU access, fostering advancements in open-source projects, education, and AI research.

- OpenAI and Together Compute Partnership: @togethercompute highlighted the partnership between OpenAI and Together Compute.
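To make the X-Sample Contrastive Loss item above more concrete, here is a minimal sketch of a contrastive loss with continuous similarity targets. It is an illustration of the general idea only, not the published X-CLR formulation: the function names, the toy embeddings, and the hand-written target matrices are all assumptions made for this example.

```python
import math

def softmax(values, temperature):
    """Numerically stable softmax of values / temperature."""
    scaled = [v / temperature for v in values]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def soft_target_contrastive_loss(view_a, view_b, targets, temperature=0.1):
    """Mean cross-entropy between target rows and predicted similarity rows.

    targets[i][j] says how strongly sample i should match sample j; each row
    sums to 1. An identity matrix recovers the usual one-hot contrastive loss.
    """
    total = 0.0
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) for other in view_b]
        predicted = softmax(logits, temperature)
        # Skip zero-weight targets so they contribute nothing to the sum.
        total -= sum(t * math.log(p) for t, p in zip(targets[i], predicted) if t > 0)
    return total / len(view_a)

# Hypothetical toy data: two samples, each embedded under two views.
view_a = [[1.0, 0.0], [0.0, 1.0]]
view_b = [[1.0, 0.0], [0.0, 1.0]]
hard_targets = [[1.0, 0.0], [0.0, 1.0]]  # binary: only the matching pair counts
soft_targets = [[0.8, 0.2], [0.2, 0.8]]  # continuous: the two samples are partly related

hard_loss = soft_target_contrastive_loss(view_a, view_b, hard_targets)
soft_loss = soft_target_contrastive_loss(view_a, view_b, soft_targets)
```

With the soft targets, the loss penalizes these embeddings for treating the two samples as completely unrelated, so `soft_loss` comes out larger than `hard_loss`. In practice the target matrix would come from some external similarity signal rather than being written by hand.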

Memes and Humor on AI Twitter

On Twitter, various humorous tweets related to AI were shared. One tweet joked about Magic and D&D being included in 'Humanity’s Last Exam,' implying that LLMs might replace Rules Lawyers. Another tweet reflected on AI's capabilities through memes, highlighting the lighter side of AI advancements. Additionally, there was a humorous debate questioning the trustworthiness of Elon Musk compared to Sam Altman in the realm of AI leadership. Another tweet shared a funny take on AI-generated content, showcasing the quirky outcomes when AI models interact with user prompts.

AI Model Development and Multi-GPU Support

Across the Discord channels, developers discussed AI model development and multi-GPU support. Unsloth AI hinted at future updates to support large-scale training and reduce single-GPU bottlenecks. Windsurf users reported depleting flow credits due to AI-induced code errors. Dolphin-R1 was sponsored for open release. A BioML postdoc aimed to adapt Striped Hyena for eukaryotic sequences. NVIDIA's RTX 5090 was praised for speed but criticized for power consumption. The CUDA 12.8 release excited developers with its support for the Blackwell architecture. Discussions highlighted VRAM demands for training large models. Users demanded transparent fee structures for better cost management.

Latent Space Discord

- Operator Takes the Digital Wheel: OpenAI introduced Operator, an agent that autonomously navigates a browser to perform tasks. Observers tested it for research tasks and raised concerns about CAPTCHA loops.

- Imagen 3 Soars Past Recraft-v3: Google’s Imagen 3 claimed the top text-to-image spot, surpassing Recraft-v3. Community members highlighted refined prompt handling.

- DeepSeek RAG Minimizes Complexity: DeepSeek simplifies retrieval-augmented generation by allowing extensive documents to be ingested directly, with KV caching boosting throughput.

- Fireworks AI Underprices Transcription: Fireworks AI introduced a streaming transcription tool at $0.0032 per minute after a free period. They claim near Whisper-v3-large quality with 300ms latency.

- API Revenue Sharing Sparks Curiosity: Participants noted that OpenAI does not credit API usage toward ChatGPT subscriptions. They wondered if any provider pays users for API activity.

Discussions on Windsurf AI Features and Performance

Users in the Windsurf Discord channel shared their experiences and concerns related to the AI tool. Some users reported issues with significant depletion of flow credits and errors while using Windsurf. Login and permission problems were encountered by several users as well. Discussions also revolved around developing mobile apps with Windsurf, feedback on AI model performance compared to Sonnet 3.5, and privacy concerns with new tools like Trae. The community expressed the need for consistent AI results and raised questions about data privacy risks.

Building and Fine-Tuning Agentic IDEs

The discussion covers the development and optimization of several AI models, particularly DeepSeek models such as R1 and DeepSeek Qwen. Key points include the strong performance of DeepSeek R1, upcoming multi-GPU support in Unsloth to improve training efficiency, and the need to fine-tune models like DeepSeek Qwen for non-English languages. Members also highlighted challenges in biological tokenization and the importance of evaluating models before fine-tuning. Links to relevant resources, along with discussions of improved hardware setups and model training strategies, round out the section.

Perplexity AI Sharing

This section covers topics shared in the Perplexity AI channels, including PyCTC Decode projects, Mistral's IPO plans, DeepSeek R1 model comparisons, a music streaming project, and questions about AI prompts. Other threads touch on the development of Perplexity Assistant, the introduction of Operator and agents, API issues, SOC 2 compliance for the API, Sonar model comparisons, and retrieving old responses by ID. Members also gave positive feedback on API updates and model standardization improvements, and discussed premature announcements.

GPT Outage, Voice Feature Issues, and Humorous Blame Game

The 'gpt-4-discussions' channel covered problems during a GPT outage, including bad gateway errors and an unavailable voice feature. Members jokingly blamed LeBron James and Ronaldo for the downtime. Status updates from OpenAI indicated that fixes were in progress, and users coped with the disruption through humor.

Nous Research AI Interesting Links

EvaByte's Chunked Attention Mechanism:

- The EvaByte architecture uses a fully chunked linear attention method that compresses the attention footprint, combined with multi-byte prediction for higher throughput. For implementation details, refer to the source on GitHub.

- An illustrative sketch highlights this architecture.
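As a rough illustration of what "chunked linear attention" means in general, the sketch below computes causal kernelized attention one chunk at a time while carrying a small running state between chunks. This is a generic textbook-style formulation and not the actual implementation from the GitHub source; the `elu(x) + 1` feature map and all function names are assumptions chosen for the example.

```python
import math

def feature_map(x):
    """Positive feature map elu(x) + 1, a common choice for linear attention."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def naive_causal_linear_attention(Q, K, V):
    """Reference version: every query scans all earlier keys (quadratic cost)."""
    fq = [feature_map(q) for q in Q]
    fk = [feature_map(k) for k in K]
    dv = len(V[0])
    out = []
    for t in range(len(Q)):
        weights = [dot(fq[t], fk[s]) for s in range(t + 1)]
        norm = sum(weights)
        out.append([sum(w * V[s][j] for s, w in enumerate(weights)) / norm
                    for j in range(dv)])
    return out

def chunked_causal_linear_attention(Q, K, V, chunk_size):
    """Same result, but earlier chunks are summarized in a fixed-size state."""
    d, dv = len(Q[0]), len(V[0])
    S = [[0.0] * dv for _ in range(d)]  # running sum of phi(k) v^T over past chunks
    z = [0.0] * d                       # running sum of phi(k) over past chunks
    out = []
    for start in range(0, len(Q), chunk_size):
        end = min(start + chunk_size, len(Q))
        fq = [feature_map(q) for q in Q[start:end]]
        fk = [feature_map(k) for k in K[start:end]]
        vs = V[start:end]
        for i in range(end - start):
            # Contribution of all previous chunks, read from the compressed state.
            num = [sum(fq[i][a] * S[a][j] for a in range(d)) for j in range(dv)]
            den = dot(fq[i], z)
            # Contribution of earlier positions inside the current chunk.
            for s in range(i + 1):
                w = dot(fq[i], fk[s])
                den += w
                for j in range(dv):
                    num[j] += w * vs[s][j]
            out.append([n / den for n in num])
        # Fold the finished chunk into the running state.
        for s in range(end - start):
            for a in range(d):
                z[a] += fk[s][a]
                for j in range(dv):
                    S[a][j] += fk[s][a] * vs[s][j]
    return out
```

The state `S` and `z` has a fixed size regardless of sequence length, which is the sense in which the attention footprint is compressed. Both functions take lists of equal-length query, key, and value vectors and should agree up to floating-point rounding for any chunk size.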

Innovative Tensor Network Library:

- A new ML library based on tensor networks has been developed, featuring named edges instead of numbered indices for intuitive tensor operations like convolutions.

- This library employs symbolic reasoning and matrix simplification, outputting optimized compiled torch code, enhancing performance with techniques like common subexpression elimination in both forward and backward passes.

- The creator of the tensor network ML library is seeking user feedback to improve usability, inviting users to give it a spin and share their experiences.
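To show why named edges can be more intuitive than numbered indices, here is a small self-contained sketch of the idea. It does not use or reproduce the library's API: `NamedTensor`, `from_function`, `at`, and `contract` are hypothetical names invented for this illustration, and the implementation is a naive dense one with no symbolic simplification or compilation.

```python
from itertools import product

class NamedTensor:
    """Dense tensor whose axes are identified by edge names, not positions."""

    def __init__(self, edges, values):
        self.edges = dict(edges)    # edge name -> dimension size
        self.values = dict(values)  # index tuple (in edge order) -> number

    @classmethod
    def from_function(cls, edges, fn):
        """Build a tensor by calling fn(name=index, ...) for every index combination."""
        names = list(edges)
        ranges = [range(edges[name]) for name in names]
        values = {idx: fn(**dict(zip(names, idx))) for idx in product(*ranges)}
        return cls(edges, values)

    def at(self, **index):
        """Look up one entry by edge name; extra names are ignored."""
        return self.values[tuple(index[name] for name in self.edges)]

def contract(a, b):
    """Multiply two tensors, summing over every edge name they share."""
    shared = [name for name in a.edges if name in b.edges]
    for name in shared:
        if a.edges[name] != b.edges[name]:
            raise ValueError(f"edge {name!r} has mismatched sizes")
    free = {name: size for name, size in {**a.edges, **b.edges}.items()
            if name not in shared}

    def entry(**index):
        total = 0
        for summed in product(*(range(a.edges[name]) for name in shared)):
            full = {**index, **dict(zip(shared, summed))}
            total += a.at(**full) * b.at(**full)
        return total

    return NamedTensor.from_function(free, entry)

# Matrix product written without caring about axis order.
A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
left = NamedTensor.from_function({"i": 2, "k": 2}, lambda i, k: A[i][k])
# Same matrix B, but with its edges declared in the opposite order.
right = NamedTensor.from_function({"j": 2, "k": 2}, lambda j, k: B[k][j])
result = contract(left, right)  # sums over the shared edge "k"
```

Because the contraction is driven by names, `right` can store its axes in any order and still multiply correctly, whereas a positional API would need an explicit transpose.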

Advanced Features:

- The library promises various advanced features, including symbolic expectation calculations relative to variables.

- This innovative tool aims to streamline tensor operations while providing intricate enhancements that could greatly benefit ML workflows.

GPU Mode Discussions

Highlights from the GPU Mode discussions:

- Concerns were raised about AI models being easily jailbroken, leading to potential risks.

- Members discussed DDoS attacks using conversational AI and the challenges of protecting AI models.

- Issues with step execution order and data overwriting were addressed in CUDA kernels.

- The release of CUDA Toolkit 12.8 with support for Blackwell architecture and new tensor instructions was highlighted.

- Discussions on selecting GPUs, running large models locally, and multi-GPU training strategies were ongoing.

- ComfyUI's search for machine learning engineers, the launch of the Tiny GRPO repository, and the new Reasoning Gym project were shared.

- Performance tips, community contributions, and cloud computing options were also discussed.

- The section covered various links to resources, including tutorials, tools, and community events.

Cohere API Discussions

A member inquired about the location of Cohere API endpoints due to experiencing latency from Chile and suspected a topological issue. The possibility of mounting Cohere Reranker models on-premise or accessing a region close to South America was also discussed. Another member suggested directing inquiries to the sales team at support@cohere.com.

User Experiences and Discussions on NotebookLM Integration

Users share their experiences and insights on integrating NotebookLM into their study workflows. One user excitedly shares their progress in week three, while another learns about Obsidian's markdown capabilities from a YouTube video shared in the community. Members raise concerns about audio generation prompts defaulting to general content and about problems saving notes in NotebookLM. Links to the resources and tools mentioned in the discussions are provided for further exploration.

Social Media and Newsletter Links

The webpage includes links to the Twitter account of Latent Space Podcast, an external website for the newsletter, and AI News on Twitter. It also mentions the platform Buttondown for newsletter management.

FAQ

Q: What is OpenAI's Operator, and what is it for?

A: OpenAI's Operator is an agent designed to autonomously interact with web browsers to perform tasks like booking reservations and ordering groceries efficiently.

Q: What is DeepSeek R1, and why is it important in AI research?

A: DeepSeek R1 is an open-source reasoning model known for surpassing competitors on challenges like 'Humanity’s Last Exam'. Its transformer architecture and performance benchmarks make it a significant advancement in AI research.

Q: What is VideoLLaMA 3, and why was it open-sourced?

A: VideoLLaMA 3 is a multimodal foundation model for image and video understanding from Alibaba DAMO Academy. It was open-sourced to facilitate research and practical applications in the field.

Q: What are the key features of Perplexity Assistant released by Perplexity AI?

A: Perplexity Assistant is an AI tool for Android that integrates reasoning and search capabilities. Users can activate the assistant to benefit from features like multimodal interactions.

Q: What is Humanity's Last Exam, and what is its significance in evaluating AI?

A: Humanity's Last Exam is a dataset of 3,000 questions introduced to assess AI's reasoning abilities. Current models are scoring below 10% accuracy, indicating the need for improvement in AI reasoning capabilities.

Q: What are some ethical considerations discussed in the AI community?

A: Ethical discussions in the AI community include topics such as providing safe AI responses through tools like Citations by @AnthropicAI, cautioning against overhyping AI advancements, and promoting AI as creative collaborators rather than mere tools.

Q: What AI advancements were highlighted in the AI Research and Development section?

A: Advancements mentioned include exploring program synthesis for achieving Artificial General Intelligence, progress in training Diffusion Feature Extractors for enhanced understanding, and the introduction of X-Sample Contrastive Loss for improved contrastive learning performance.

Q: What industry trends were discussed in the AI Industry and Companies section?

A: Topics like investments in AI projects such as the Stargate Project, the impact of Google Colab in democratizing GPU access, and partnerships like OpenAI's collaboration with Together Compute were highlighted in the AI Industry and Companies section.

Q: What were some humorous discussions related to AI on Twitter?

A: Humorous tweets on AI included a joke about Magic and D&D being included in 'Humanity’s Last Exam', memes reflecting on AI capabilities, a debate on the trustworthiness of Elon Musk compared to Sam Altman, and humor around AI-generated content.

Q: What were the key advancements discussed in the Nous Research AI Interesting Links section?

A: The EvaByte architecture uses a fully chunked linear attention method, combined with multi-byte prediction, to optimize throughput. The section also covered a separate ML library based on tensor networks, which uses named edges instead of numbered indices to make tensor operations more intuitive and outputs optimized compiled torch code.
